[This is the text of my presentation at the Digital Classics Association panel titled Making Meaning from Data at the 146th Annual Meeting of the Society for Classical Studies (formerly the American Philological Association) in New Orleans. Unfortunately I wasn’t able to attend the conference, so I recorded the talk instead.]

Digital Study of Intertextuality

In this paper, divided into two parts, we consider two approaches to the digital study of intertextuality.

The first one, which was just presented, consists of developing software that allows us to find new candidates for parallel passages.

The focus of my talk is on the second approach, which consists of tracking parallel passages that were already “discovered” and are cited in secondary literature: commentaries, journal articles, analytical reviews, and so on.

What I’m going to present today is essentially what I’ve been developing during my PhD at King’s College London in the Department of Digital Humanities.

The Classicists’ Toolkit

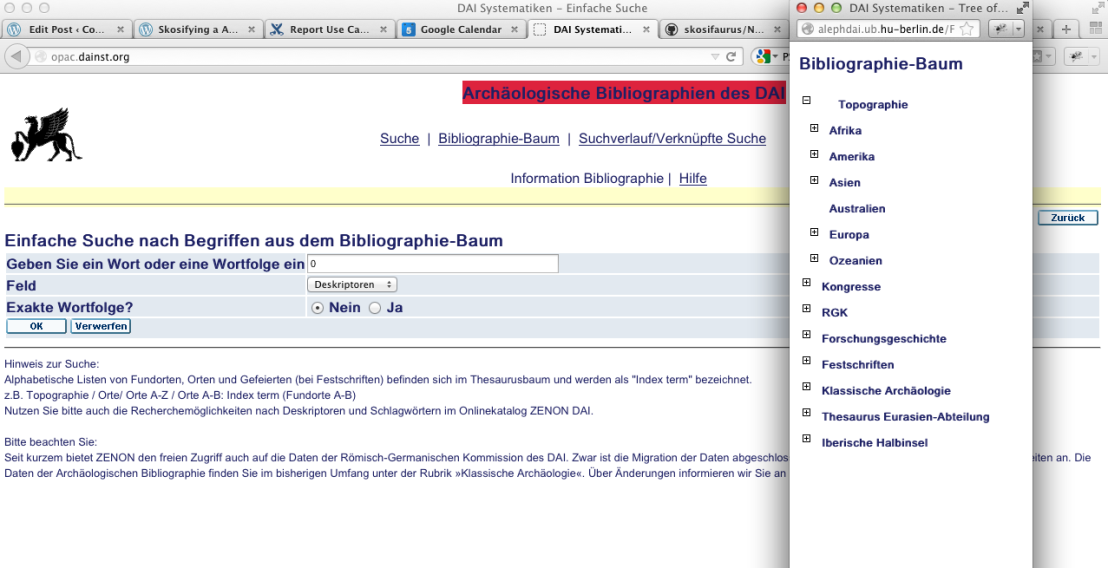

The indexing of citations in itself is nothing new: it has been done for centuries in different forms, such as indexes of cited passages at the end of a volume (the so-called “indices locorum”) and subject classifications of library catalogues.

The main problem is that creating an index of citations that is both accurate and very granular is extremely time-consuming (by granular I mean precise down to the level of the cited passage).

Therefore, the tools that are more precise and granular usually cover a smaller set of resources, whereas the tools with high coverage, such as a full-text search over Google Books, are less precise and less granular. The automatic indexing system that I’ve developed tries to combine high coverage with fine granularity. In its current implementation the system is not 100% accurate, but this is something that can be improved in the future.

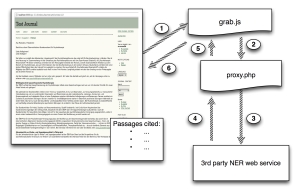

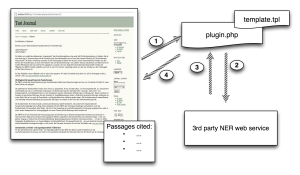

Citation Extraction Step 1: (Named Entity Recognition)

Before considering the results of the automatic indexing, let’s look briefly at how the system works.

The first step is to capture the components of a citation from plain text; they are highlighted here in different colours.
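To make this step concrete, here is a minimal sketch of what capturing citation components can look like. It is a toy stand-in built on hand-written patterns, not the system described in this talk. The sample sentence, the abbreviation list and the labels `WORK_REF` and `SCOPE` are all assumptions made for illustration.

```python
import re

# Toy patterns, assumed for illustration only.
# WORK_REF: an abbreviated reference to an author and work.
# SCOPE: the numeric part of a citation, e.g. "11,4,11".
WORK_REF = re.compile(r"\b(?:Plin\. nat\.|Verg\. georg\.|Ar\. Ach\.)")
SCOPE = re.compile(r"\b\d+(?:,\d+)*\b")


def find_components(text):
    """Return (label, start offset, surface form) for each component, in text order."""
    found = []
    for label, pattern in (("WORK_REF", WORK_REF), ("SCOPE", SCOPE)):
        for match in pattern.finditer(text):
            found.append((label, match.start(), match.group()))
    return sorted(found, key=lambda item: item[1])


# Hypothetical input sentence.
sample = "cf. Plin. nat. 11,4,11 and 11,16,46; Verg. georg. 3,82"
components = find_components(sample)
for label, _start, surface in components:
    print(label, surface)
```

A fixed list of abbreviations like this one would not scale to real publications, where the same work is abbreviated in many different ways; it only shows the shape of the output that the next step consumes.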

Citation Extraction Step 2: (Relation Detection)

The second step is to connect these components together to form citations. “11,4,11” and “11,16,46”, for example, both depend on the reference to Pliny’s Naturalis Historia. Each of these relations constitutes a canonical citation.
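One simple way to picture this step is a rule that attaches each scope to the closest work reference that precedes it. The sketch below implements only that heuristic, under the assumption that components arrive in text order as `(label, surface form)` pairs. The labels and the function name `link_scopes` are hypothetical, and the actual system may detect relations quite differently.

```python
def link_scopes(components):
    """Attach each scope to the closest work reference that precedes it."""
    citations = []
    current_work = None
    for label, surface in components:
        if label == "WORK_REF":
            current_work = surface
        elif label == "SCOPE" and current_work is not None:
            # A scope with no preceding work reference is skipped.
            citations.append((current_work, surface))
    return citations


# Components in text order (labels are assumed for this sketch).
components = [
    ("WORK_REF", "Plin. nat."),
    ("SCOPE", "11,4,11"),
    ("SCOPE", "11,16,46"),
]
citations = link_scopes(components)
print(citations)
```

Both scopes end up linked to the same work reference, which gives two separate canonical citations.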

Citation Extraction Step 3: (Disambiguation)

Finally, each citation needs to be assigned a CTS URN. CTS URNs are unique identifiers for passages of canonical texts (Charlotte Roueche, if I remember correctly, once defined them as Social Security Numbers for texts).
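As a rough illustration of the output of this step, the sketch below turns a linked citation into a CTS URN by looking up the work and appending the passage with its levels separated by periods. The lookup table is a hypothetical stand-in for real disambiguation, which has to resolve ambiguous abbreviations. The identifiers in it are illustrative and should be verified against a CTS catalogue.

```python
# Hypothetical lookup table from abbreviated references to work-level URNs.
# The identifiers are illustrative; verify them against a CTS catalogue.
WORK_URNS = {
    "Plin. nat.": "urn:cts:latinLit:phi0978.phi001",
    "Verg. georg.": "urn:cts:latinLit:phi0690.phi002",
}


def to_cts_urn(work_ref, scope):
    """Append the passage component to the work-level URN."""
    base = WORK_URNS[work_ref]
    # "11,4,11" (book, chapter, section) becomes "11.4.11".
    passage = scope.replace(",", ".")
    return f"{base}:{passage}"


print(to_cts_urn("Plin. nat.", "11,4,11"))
```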

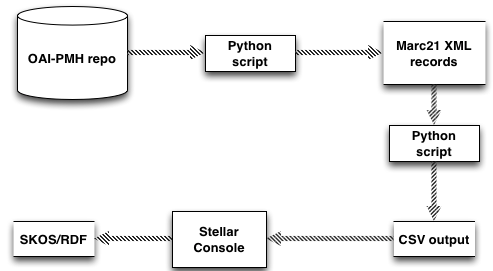

Mining Citations from APh and JSTOR

I’ve used this system to mine citations from two datasets: the reviews of L’APh and the articles in JSTOR that are related to Classics. I don’t want to go into the debate concerning big data in the Humanities, but the data from these two resources was already too big for me, given the limits of a PhD, so I selected two samples. For the APh I worked on a small fraction of the 2004 volume (some 360 abstracts, for a total of 26k tokens and 380 citations), whereas for JSTOR I focussed on one journal, Materiali e discussioni per l’analisi dei testi classici, which alone contains some 660 articles published over 29 years, for a total of 5.6 million tokens.

From Index to Network

The digital index that is created by mining citations from texts is not substantially different from indexes of citations as we already know them. Well, the scale is different, given that such indexes can be created automatically from thousands of texts.

But at the same time, representing this index as a network radically changes how we can access and interact with the information it contains.

The main difference, which is especially relevant for the study of intertextuality, is that cited authors, works and passages are not shown in isolation as in an index, but the relations that exist between them can be measured, searched for and visualised.

From Texts to Network

The citations that are extracted from texts are transformed into a network structure. In order to analyse patterns at different levels I created three networks characterised by different degrees of granularity.

The macro network has only two types of nodes: first, the documents that contain the citations (in this case the green nodes, which represent abstracts from L’APh); and second, the cited authors, the red nodes. A connection between two nodes represents a citation. In the example here, these two precise citations are represented as a connection between the citing documents and the two cited authors, Pliny and Vergil.

The meso network is more granular: in addition to the cited authors, the cited works are also displayed. In this example, the Naturalis Historia and the Georgics are represented as two orange nodes.

Finally, at the micro level the network also contains the individual cited passages, in addition to authors and works.
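The three levels can be thought of as the same citation records cut off at different depths. The following sketch assumes each citation is stored as a `(document, author, work, passage)` record and connects every document to the node it cites at the chosen level. The records are invented for illustration. How cited nodes are linked to one another (a work to its author, for instance) is left out, since that is a design choice not covered here.

```python
from collections import Counter

# Hypothetical records: (citing document, author, work, passage).
CITATIONS = [
    ("abstract-1", "Pliny", "Naturalis Historia", "11.4.11"),
    ("abstract-1", "Pliny", "Naturalis Historia", "11.16.46"),
    ("abstract-1", "Vergil", "Georgics", "4.219"),
    ("abstract-2", "Vergil", "Georgics", "3.82"),
    ("abstract-2", "Vergil", "Aeneid", "1.1"),
]

# Number of fields that identify a cited node at each level.
DEPTH = {"macro": 1, "meso": 2, "micro": 3}


def build_edges(citations, level):
    """Count citations between each document and its cited node at this level."""
    depth = DEPTH[level]
    edges = Counter()
    for doc, *target in citations:
        edges[(doc, tuple(target[:depth]))] += 1
    return edges


for level in ("macro", "meso", "micro"):
    print(level, len(build_edges(CITATIONS, level)), "edges")
```

With these sample records the macro network has 3 edges, the meso network 4 and the micro network 5: the more granular the level, the less often two citations collapse onto the same edge.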

APh Micro Level

[interactive visualization available at phd.mr56k.info/data/viz/micro]

The micro-level network, which is shown in this slide, is too granular to let certain patterns emerge, but it’s extremely useful in other cases, for example when searching for information.

This network tends to be very sparse, meaning that nodes are not highly connected with each other: few documents cite the very same text passage (and are therefore connected). At the same time, this sparseness makes the few connections that are present extremely valuable. In fact, such a sparse network is especially useful when searching for publications that are related to a specific text passage, or publications that discuss a specific set of parallel passages.

These two documents, for example, are likely to be closely related to each other as they both cite the same two passages from the third book of the Georgics. And the same is true for these other two documents both containing a citation to line 9 of Aristophanes’ Acharnians.
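This kind of search amounts to finding pairs of documents that share at least one cited passage. A minimal sketch follows, assuming each document is represented by the set of passages it cites. The document names and the line numbers within the Georgics are made up for the example.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical documents and the passages they cite.
DOCS = {
    "doc-A": {"Verg. georg. 3.10", "Verg. georg. 3.40"},
    "doc-B": {"Verg. georg. 3.10", "Verg. georg. 3.40", "Verg. Aen. 1.1"},
    "doc-C": {"Ar. Ach. 9"},
    "doc-D": {"Ar. Ach. 9"},
}


def shared_passages(doc_passages):
    """Map each pair of documents to the set of passages both of them cite."""
    citing = defaultdict(set)
    for doc, passages in doc_passages.items():
        for passage in passages:
            citing[passage].add(doc)
    shared = defaultdict(set)
    for passage, docs in citing.items():
        for a, b in combinations(sorted(docs), 2):
            shared[(a, b)].add(passage)
    return dict(shared)


pairs = shared_passages(DOCS)
for (a, b), passages in sorted(pairs.items()):
    print(a, b, sorted(passages))
```

Pairs that share several passages, like the first two documents here, are the strongest candidates for being closely related.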

APh: Macro Level

[interactive visualization available at phd.mr56k.info/data/viz/macro]

This other slide shows the macro-level network, which is created out of the citations extracted from the L’APh sample. The size of the red nodes, which represent ancient authors, is proportional to the number of citing documents, whereas the thickness of the connections between nodes depends on the number of times the author is cited. The isolated, faded-out nodes are the documents from L’APh without citations and are displayed here just to give an idea of their relatively small number.
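The two visual quantities can be derived from a plain list of citations. The sketch below assumes one `(document, author)` pair per citation and reads the edge thickness as the number of times a given document cites a given author. That reading is an interpretation, and the sample data is invented.

```python
from collections import Counter


def macro_weights(citations):
    """Return (node_size, edge_weight) for a list of (document, author) citations."""
    # Edge weight: how many times a document cites an author.
    edge_weight = Counter(citations)
    # Node size: how many distinct documents cite an author.
    node_size = Counter(author for _doc, author in edge_weight)
    return node_size, edge_weight


# Hypothetical citations, one pair per citation.
sample = [
    ("d1", "Vergil"),
    ("d1", "Vergil"),
    ("d2", "Vergil"),
    ("d2", "Euripides"),
]
node_size, edge_weight = macro_weights(sample)
print(node_size["Vergil"], edge_weight[("d1", "Vergil")])
```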

When looking at the same network of citations, but at the macro level, the overall picture looks very different. Looking at it from this perspective it is possible to see already the centrality of Vergil, with 29 citing documents. Similarly, what emerges is a group of abstracts that discuss Aristophanes in relation to Euripides. This is not at all surprising, but it emerges clearly and nicely from this macro-level network.

APh: Meso Level

[interactive visualization available at phd.mr56k.info/data/viz/meso]

The meso-level network provides some more information concerning which authors are cited, but without getting as granular and sparse as the micro-level network.

JSTOR: diachronic trends in MD (1978-2006)

The second aspect in which citation networks differ very much from the traditional indexes of cited passages is the quantitative analysis they allow for.

This diagram is an example of this kind of analysis and shows the number of citations to the 5 most cited authors plotted over time. Here I’ve chosen the 5 most cited authors, but one could choose a specific set of authors (for example Lucan, Vergil and Ovid) and analyse how the attention they received varied over time.
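The counting behind such a diagram is straightforward. Here is a minimal sketch, assuming each extracted citation has been reduced to a `(publication year, author)` pair. The sample data is invented and the function name `top_author_trends` is hypothetical.

```python
from collections import Counter


def top_author_trends(citations, n=5):
    """Return (years, series), where series maps each top author to yearly counts."""
    totals = Counter(author for _year, author in citations)
    top = [author for author, _count in totals.most_common(n)]
    per_year = Counter((year, author) for year, author in citations if author in top)
    years = sorted({year for year, _author in citations})
    series = {author: [per_year[(year, author)] for year in years] for author in top}
    return years, series


# Invented sample: (publication year of the citing article, cited author).
sample = [
    (1978, "Vergil"), (1978, "Vergil"), (1978, "Ovid"),
    (1979, "Vergil"), (1979, "Lucan"), (1979, "Ovid"), (1979, "Ovid"),
]
years, series = top_author_trends(sample, n=2)
print(years, series)
```

To follow a hand-picked set of authors instead, one would replace the `most_common` selection with a fixed list of names.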

This example has some clear limitations. First, some errors of the citation extraction system have resulted in a high number of citations of the Appendix Vergiliana. Second, this graph is based on the citations contained in just one journal; the results would be much more interesting if the whole of JSTOR were considered.